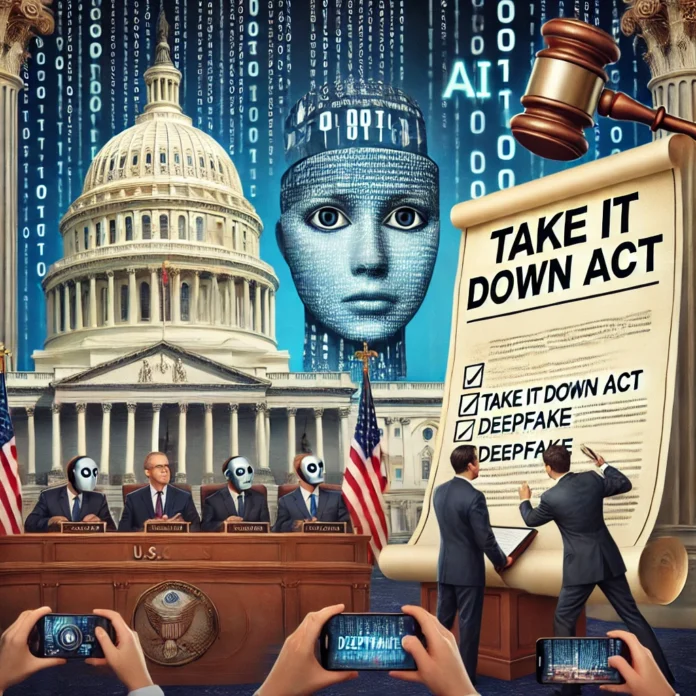

In a historic move, the U.S. Congress has passed the ‘Take It Down Act,’ the first major federal legislation aimed at addressing the growing threat of AI-generated deepfake pornography and non-consensual intimate imagery. The bill, which received overwhelming bipartisan support, mandates that online platforms remove such content within 48 hours of notification and criminalizes its distribution.

A Bipartisan Effort Rooted in Advocacy

The legislation was propelled by the courageous testimony of Elliston Berry and Francesca Mani, teenagers who were targeted with AI-generated explicit images. Their advocacy, alongside support from Senator Ted Cruz and First Lady Melania Trump, galvanized lawmakers across the political spectrum. After unanimous approval in the Senate, the House passed the bill 409-2.

Key Provisions and Enforcement Mechanisms

- Mandatory Removal: Covered online platforms must remove reported non-consensual intimate imagery, including AI-generated deepfakes, within 48 hours of receiving a valid request from the person depicted.

- Criminalization: Knowingly publishing such content without consent, or threatening to publish it, is a federal crime.

- FTC Oversight: The Federal Trade Commission enforces the removal requirement, treating a platform's failure to comply as an unfair or deceptive practice. This route sidesteps the contentious debate over Section 230.

Support and Concerns

Tech companies including Meta and Snap, Snapchat's parent company, have endorsed the bill as a balanced approach to regulation. Civil liberties organizations, however, warn of potential overreach: because the takedown process offers few safeguards against false or bad-faith requests, platforms may remove lawful speech to meet the 48-hour deadline. Critics also question whether the FTC can enforce the law effectively, especially given recent changes to the agency's leadership.

Implications for the Future

The ‘Take It Down Act’ sets a precedent for future AI-related legislation, highlighting the need for robust frameworks to protect individuals from emerging technological harms. Advocates hope this law will pave the way for broader reforms in online safety and AI governance.