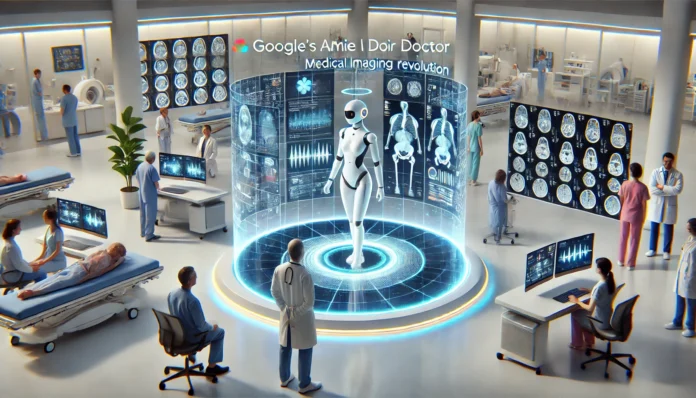

In a leap that could redefine the future of medicine, Google has unveiled a major upgrade to its Articulate Medical Intelligence Explorer (AMIE), an advanced AI system now capable of interpreting medical images such as X-rays, MRIs, and CT scans. This breakthrough promises to transform diagnostic medicine, offering a glimpse into a future where AI not only augments but potentially surpasses human expertise in certain clinical settings.

Multimodal Intelligence: From Conversation to Vision

AMIE was already known for its diagnostic dialogue: in earlier simulated studies, it matched or exceeded human primary care physicians on empathy and accuracy. However, its inability to analyze visual medical data limited its real-world utility. The newly enhanced AMIE bridges this gap: it can now request, interpret, and reason about visual medical information during diagnostic conversations, integrating image analysis with patient-reported symptoms for a more holistic assessment.

“Imagine an AI that can not only listen to your symptoms but also look at your scans and tell you what it sees, all while explaining its reasoning in a clear, understandable way. This is the promise of multimodal AMIE, and it’s a giant leap towards more comprehensive AI-assisted healthcare.”

How It Works: The Power of Multimodal AI

The upgraded AMIE leverages multimodal learning, a cutting-edge approach that allows AI systems to process and synthesize information from multiple sources – in this case, both text (patient dialogue) and images (medical scans). During a diagnostic session, AMIE can:

- Request relevant medical images based on the conversation.

- Analyze X-rays, MRIs, or CT scans for abnormalities.

- Integrate findings from images with patient history and symptoms.

- Engage in a dialogue with patients or clinicians, explaining its reasoning and suggesting next steps.

This capability could speed up diagnosis and may reduce the risk of human error, especially in high-volume or resource-limited settings.
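To make the four steps above concrete, here is a minimal Python sketch of a session that requests an image, analyzes it, and folds the finding into a summary. It is only an illustration of the control flow: the `Session` class, the function names, and the two lookup tables are hypothetical stand-ins invented for this example, not AMIE's actual architecture or any Google API, and the symptom-to-scan mappings are toy values rather than clinical guidance.

```python
from dataclasses import dataclass, field


@dataclass
class Session:
    """Hypothetical container for one diagnostic conversation."""
    symptoms: list = field(default_factory=list)
    image_findings: list = field(default_factory=list)


# Toy lookup tables standing in for learned models (assumptions for
# illustration only; a real system would use trained language and vision models).
IMAGE_TRIGGERS = {"cough": "chest X-ray", "headache": "brain MRI"}
FINDING_LABELS = {"chest X-ray": "opacity in lower lobe"}


def request_image(session):
    """Step 1: pick the first image type suggested by the reported symptoms."""
    for symptom in session.symptoms:
        if symptom in IMAGE_TRIGGERS:
            return IMAGE_TRIGGERS[symptom]
    return None


def analyze_image(image_type):
    """Step 2: stub analyzer returning a canned finding for the image type."""
    return FINDING_LABELS.get(image_type, "no abnormality detected")


def assess(session):
    """Steps 3 and 4: combine symptoms and findings into a readable summary."""
    parts = ["Reported symptoms: " + ", ".join(session.symptoms)]
    if session.image_findings:
        parts.append("Image findings: " + ", ".join(session.image_findings))
    return ". ".join(parts) + "."


def run_session(symptoms):
    """Run the request, analyze, integrate, explain sequence once."""
    session = Session(symptoms=list(symptoms))
    image_type = request_image(session)
    if image_type is not None:
        finding = analyze_image(image_type)
        session.image_findings.append(f"{image_type}: {finding}")
    return assess(session)


if __name__ == "__main__":
    print(run_session(["cough", "fever"]))
    print(run_session(["fatigue"]))
```

The point of the sketch is the ordering: the image request is driven by the conversation, and the image finding is merged with the reported symptoms before any explanation is produced, rather than being handled as a separate pipeline.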

Implications: A New Era for Healthcare

The implications of Google’s breakthrough are profound:

- Enhanced Diagnostic Accuracy: By combining visual and conversational data, AMIE may catch subtleties that could escape even experienced clinicians.

- Accessibility: AI-powered diagnostics could bring high-quality healthcare to underserved regions where medical specialists are scarce.

- Efficiency: Automated image analysis can help hospitals manage growing caseloads, reducing wait times and improving patient outcomes.

While the technology is still in the research phase, early results suggest that multimodal AI like AMIE could soon become an indispensable tool in clinics and hospitals worldwide.

Challenges and the Road Ahead

Despite its promise, the integration of AI into medical diagnostics raises critical questions about transparency, accountability, and the evolving role of human clinicians. Ensuring that AI recommendations are explainable and that patient privacy is protected will be essential as these systems move toward clinical deployment.

Google’s AMIE is currently being tested in controlled research environments, but industry analysts expect rapid adoption as regulatory frameworks catch up with technological advances.